A good way to understand the wayland architecture and how it is different from X is to follow an event from the input device to the point where the change it affects appears on screen.

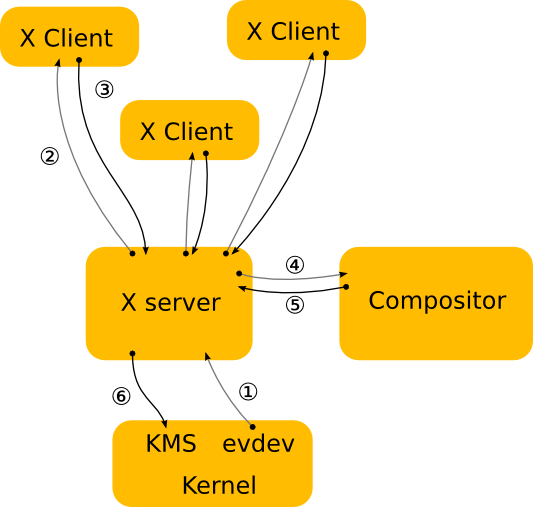

This is where we are now with X:

As suggested above, there are a few problems with this approach. The X server doesn't have the information to decide which window should receive the event, nor can it transform the screen coordinates to window-local coordinates. And even though X has handed responsibility for the final painting of the screen to the compositing manager, X still controls the front buffer and modesetting. Most of the complexity that the X server used to handle is now available in the kernel or self contained libraries (KMS, evdev, mesa, fontconfig, freetype, cairo, Qt, etc). In general, the X server is now just a middle man that introduces an extra step between applications and the compositor and an extra step between the compositor and the hardware.

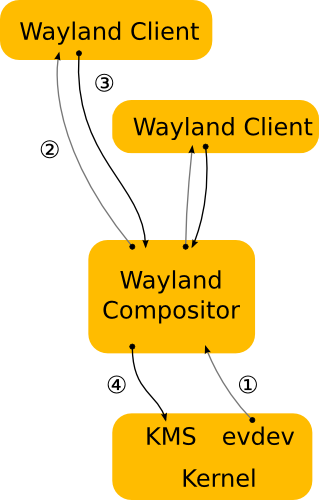

In wayland the compositor is the display server. We transfer the control of KMS and evdev to the compositor. The wayland protocol lets the compositor send the input events directly to the clients and lets the client send the damage event directly to the compositor:

One of the details I left out in the above overview is how clients actually render under wayland. By removing the X server from the picture we also removed the mechanism by which X clients typically render. But there's another mechanism that we're already using with DRI2 under X: direct rendering. With direct rendering, the client and the server share a video memory buffer. The client links to a rendering library such as OpenGL that knows how to program the hardware and renders directly into the buffer. The compositor in turn can take the buffer and use it as a texture when it composites the desktop. After the initial setup, the client only needs to tell the compositor which buffer to use and when and where it has rendered new content into it.

This leaves an application with two ways to update its window contents:

In either case, the application must tell the compositor which area of the surface holds new contents. When the application renders directly to the shared buffer, the compositor needs to be noticed that there is new content. But also when exchanging buffers, the compositor doesn't assume anything changed, and needs a request from the application before it will repaint the desktop. The idea that even if an application passes a new buffer to the compositor, only a small part of the buffer may be different, like a blinking cursor or a spinner.

Typically, hardware enabling includes modesetting/display and EGL/GLES2. On top of that, Wayland needs a way to share buffers efficiently between processes. There are two sides to that, the client side and the server side.

On the client side we've defined a Wayland EGL platform. In the EGL model, that consists of the native types (EGLNativeDisplayType, EGLNativeWindowType and EGLNativePixmapType) and a way to create those types. In other words, it's the glue code that binds the EGL stack and its buffer sharing mechanism to the generic Wayland API. The EGL stack is expected to provide an implementation of the Wayland EGL platform. The full API is in the wayland-egl.h header. The open source implementation in the mesa EGL stack is in platform_wayland.c.

Under the hood, the EGL stack is expected to define a vendor-specific protocol extension that lets the client side EGL stack communicate buffer details with the compositor in order to share buffers. The point of the wayland-egl.h API is to abstract that away and just let the client create an EGLSurface for a Wayland surface and start rendering. The open source stack uses the drm Wayland extension, which lets the client discover the drm device to use and authenticate and then share drm (GEM) buffers with the compositor.

The server side of Wayland is the compositor and core UX for the vertical, typically integrating task switcher, app launcher, lock screen in one monolithic application. The server runs on top of a modesetting API (kernel modesetting, OpenWF Display or similar) and composites the final UI using a mix of EGL/GLES2 compositor and hardware overlays if available. Enabling modesetting, EGL/GLES2 and overlays is something that should be part of standard hardware bringup. The extra requirement for Wayland enabling is the EGL_WL_bind_wayland_display extension that lets the compositor create an EGLImage from a generic Wayland shared buffer. It's similar to the EGL_KHR_image_pixmap extension to create an EGLImage from an X pixmap.

The extension has a setup step where you have to bind the EGL display to a Wayland display. Then as the compositor receives generic Wayland buffers from the clients (typically when the client calls eglSwapBuffers), it will be able to pass the struct wl_buffer pointer to eglCreateImageKHR as the EGLClientBuffer argument and with EGL_WAYLAND_BUFFER_WL as the target. This will create an EGLImage, which can then be used by the compositor as a texture or passed to the modesetting code to use as an overlay plane. Again, this is implemented by the vendor specific protocol extension, which on the server side will receive the driver specific details about the shared buffer and turn that into an EGL image when the user calls eglCreateImageKHR.